There was a moment at a warehouse in the United States where a packing line that used to slow to a crawl at peak time hummed along, not because managers hired more people, but because a robot stopped hesitating. It picked, placed, nudged, and sorted with a level of judgement that looked less like a program and more like common sense. The trick was not a new arm, or a clever gripper, but a smaller, cheaper brain sitting inside the robot, running models that could see, reason and decide at once.

That brain has a name. Nvidia calls it Jetson Thor, a Blackwell-powered module and developer kit built to run big multimodal models at the edge, close to sensors and motors. Announced in August 2025, the AGX Thor developer kit is priced at $3,499, and the production T5000 modules are aimed at volume buyers. The technical pitch is simple but far-reaching: robots can run large vision and language models on board, fuse camera, lidar and force data, and make near-instant decisions without always calling into the cloud. That lowers latency, raises reliability, and makes robots useful in real, messy workplaces.

Why that matters to business is easier to see on a factory floor than in a lab. Traditional industrial robots are excellent where the environment is fixed and the task never changes. They are fast and precise, but brittle. A robot that can adapt to a slightly different type of box, or work around a temporary obstruction, can serve where conventional automation cannot. For logistics and manufacturing firms that juggle variety, seasonality and labour shortages, that flexibility starts to look like real economic value. Early adopters have noticed. Big names such as Amazon Robotics and John Deere, along with several robot startups, are already testing the platform, and logistics players are rethinking how they scale automation.

Investment flows are following capability. The industry around humanoids and advanced mobile robots saw large funding rounds this year, signalling that venture capital and corporates are more willing to put money behind the combination of new hardware and smarter software. When the compute moves onto the robot, development cycles shorten. Startups that once needed massive server farms to train perception models can now iterate faster by running inference and continual learning at the edge. That changes the calculus for investors. Instead of asking whether robots will ever work, they now ask which robots can pay for themselves this quarter.

There are real-world examples of what this enables. Boston Dynamics’ Stretch robot, designed for container unloading and case handling, moved from pilots to larger enterprise deals after logistics operators saw throughput gains that justified the price. DHL signed a memorandum of understanding to expand Stretch deployments by more than a thousand units, an unusual scale-up that shows how enterprise buyers will commit when the ROI is clear. Those deployments focus on high-frequency, narrow tasks where reliability matters more than versatility. Jetson-style edge brains promise to widen the set of such tasks.

Still, the path is bumpy. Power and thermal limits remain stubborn. Running powerful models in a small chassis requires clever cooling and careful energy budgeting. Batteries still cap continuous hours of operation. Then there is safety. When a robot can improvise, traditional safety certification that assumes fixed sequences stops being enough. Companies must design layered safeguards, human-in-the-loop fallbacks and extensive testing regimes to satisfy regulators and unions. Integration costs are not trivial. Enterprises must invest in systems integration, staff training and maintenance. Price tags are falling, but the total cost of ownership remains the decisive metric.
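
The energy-budgeting point comes down to simple arithmetic, which the short Python sketch below makes concrete. It is a back-of-the-envelope model, not a vendor calculation: the function name `runtime_hours`, the 80% usable-capacity default and every wattage in the example are hypothetical values chosen only to show how compute draw eats into battery hours.

```python
def runtime_hours(battery_wh, compute_w, actuation_w, usable_fraction=0.8):
    """Estimate continuous hours of operation from a simple power budget.

    All inputs are illustrative assumptions:
      battery_wh      - nominal battery capacity in watt-hours
      compute_w       - average draw of the onboard compute module in watts
      actuation_w     - average draw of motors, sensors and everything else
      usable_fraction - share of capacity usable before recharge (assumed 0.8)
    """
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    total_draw_w = compute_w + actuation_w
    if total_draw_w <= 0:
        raise ValueError("total power draw must be positive")
    return battery_wh * usable_fraction / total_draw_w


if __name__ == "__main__":
    # Hypothetical robot: 1 kWh battery, 300 W for motors and sensors.
    for compute_w in (40, 100):
        hours = runtime_hours(1000, compute_w, 300)
        print(f"compute at {compute_w} W -> about {hours:.2f} h per charge")
```

In this toy model, every extra watt spent on inference comes straight out of shift length, which is why model size and power mode are business decisions as much as engineering ones.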

There are cultural and organisational hurdles too. Shop-floor supervisors remember past automation projects that promised huge productivity gains and delivered complexity instead. Trust is earned gradually. Many teams now use teleoperation as a bootstrap: humans teach robots by demonstration, and the model then generalises on the edge. That hybrid approach cuts risk, collects real production data, and speeds the shift from human control to autonomous action. It also builds confidence among workers, because the robot’s learning curve is visible and reversible.

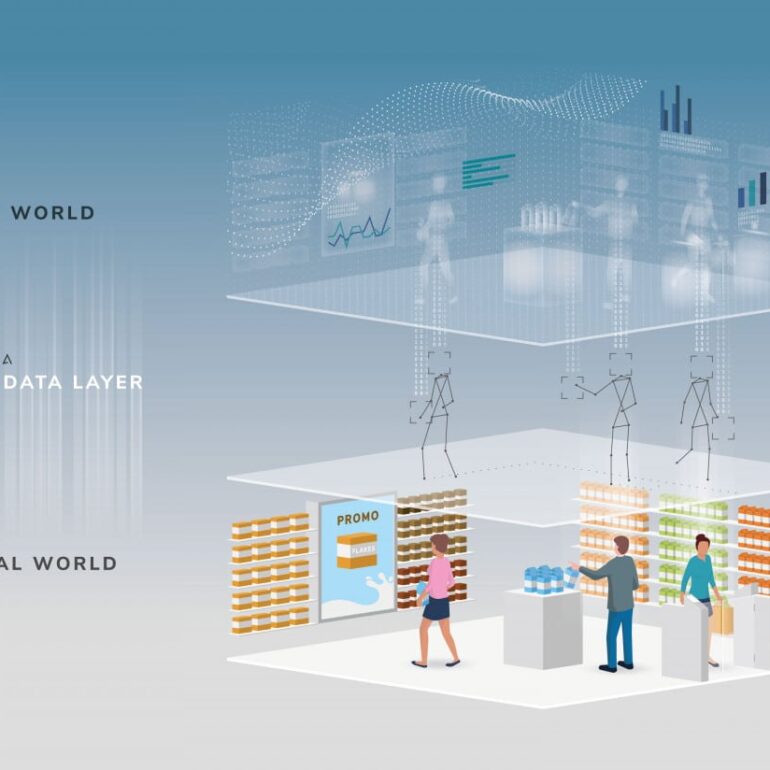

Two turning points will decide whether this era of “physical AI” scales. The first is standardisation. If developers agree on software stacks, modules and hardware interfaces, integration costs will fall, and a marketplace of components will flourish. Nvidia’s Jetson platform, with its Isaac and Metropolis toolsets, is trying to be that common layer. The second turning point is unit economics. Robots must demonstrate a clear, auditable improvement in throughput, quality or safety that offsets capex and integration costs. Projects that combine narrow tasks with high frequency, such as case handling, packing, or lineside delivery, are the easiest wins.
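
The unit-economics test can be written down as a simple payback calculation. The Python sketch below is one way to frame it, under stated assumptions: the `RobotCase` fields, the `payback_months` helper and all the figures in the example are hypothetical, and a real business case would also account for financing, downtime and ramp-up.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RobotCase:
    """Hypothetical inputs for a single-robot business case."""
    capex: float                 # purchase price of the robot
    integration: float           # one-off integration and training cost
    monthly_opex: float          # maintenance, energy and support per month
    monthly_gross_saving: float  # value of extra throughput or saved labour per month


def payback_months(case: RobotCase) -> Optional[float]:
    """Months until cumulative net savings cover the upfront spend.

    Returns None when the robot never pays back, i.e. when monthly
    running costs meet or exceed the monthly gross saving.
    """
    net_monthly = case.monthly_gross_saving - case.monthly_opex
    if net_monthly <= 0:
        return None
    return (case.capex + case.integration) / net_monthly


if __name__ == "__main__":
    # Illustrative numbers only; none of them come from a real deployment.
    case = RobotCase(capex=100_000, integration=50_000,
                     monthly_opex=2_000, monthly_gross_saving=12_000)
    print(payback_months(case))  # 15.0
```

Note that integration cost sits in the numerator alongside capex, so a cheaper module shortens payback only if integration spend falls with it.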

Back in the warehouse where the robot found its rhythm, the impact was small and human. A shift supervisor who had spent years manually balancing throughput smiled when she saw fewer jams at the pack station. She did not celebrate the robot. She celebrated the fact that the hectic hour before lunch, once a source of constant stress, could run on autopilot. For business leaders that is the point. Technology that reduces friction, that produces repeatable gains without tearing up the plant, is worth the money. The new class of edge brains, small and powerful, is making that possible.

If there is a single line to carry this piece home, it is this. Robotics has long been about stronger arms. The newest shift is about smarter, local brains. When those brains fit in a module that is affordable and reliable, robots stop being speculative experiments and start becoming tools that managers can plan around. The rest will be decided on the line, one update and one deployment at a time.