In less than three years, a small Paris lab grew into a global vendor that banks want on-premises, carmakers want in their stack and venture capitalists value at more than €10 billion. That company is Mistral AI, and its rise tells founders a clear story about timing, product craft and playing the long game on trust.

Origin, people and the first spark

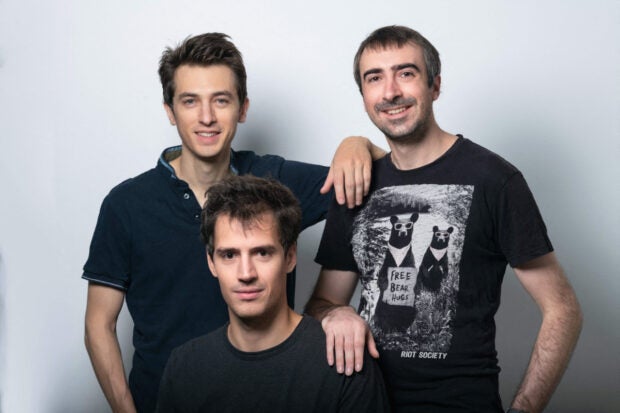

Mistral began in April 2023, when three French researchers, Arthur Mensch, Timothée Lacroix and Guillaume Lample, left research roles at Google DeepMind and Meta to build something practical and fast. They were sceptical of the slow pace of big incumbents and obsessed with making models that were both efficient and freely usable by developers. The early days were lean: the founding team worked from cramped offices and hired a handful of engineers who shared one habit, relentless iteration on small models rather than chasing headline parameter counts.

Their first breakthrough was pragmatic. Instead of competing for the biggest model, they focused on a compact 7-billion-parameter model that punched above its weight on benchmarks while staying cheap to run. Mistral 7B was released under the Apache 2.0 licence, a bold move that generated developer goodwill and rapid adoption because teams could experiment without vendor lock-in. That release, and the transparent engineering notes behind it, turned curiosity into real user traction. Within months, the startup had paying enterprise pilots and a short list of strategic partners.

What founders should study, and why it works

Focus on product leverage, not vanity metrics

Mistral’s playbook is product-first. The decision to build a high-performance, cost-efficient model rather than pursue the largest parameter count let them address a practical pain point: inference cost. That made Mistral attractive to enterprises balancing performance and budgets. The lesson is clear: build something customers can deploy affordably at scale.

Open by default, then monetise where trust matters

Releasing models under permissive licences acted like a marketing flywheel. Developers experimented, forks appeared, and enterprise interest followed. Mistral then layered commercial tooling and cloud-friendly deployment options on top to generate revenue. This hybrid approach lowered adoption friction while preserving multiple monetisation paths. It also positioned the company as a trustworthy European alternative in a market sensitive to data residency and regulation.

Fundraising and investor dynamics

Mistral’s funding rounds were fast and large, propelled by a mix of strategic and financial investors who wanted a Europe-based AI anchor. By 2025, the company was closing large rounds that implied a multibillion-euro valuation, funding heavy R&D spending on both model architecture and enterprise tooling. That capital cushion allowed Mistral to expand beyond open model releases into hardened, supported commercial models for regulated sectors. Founders should note how timing, credibility and a clear road to enterprise revenue attract both VCs and strategic heavyweights.

GTM and enterprise sales: solve a regulated problem

Mistral’s go-to-market (GTM) motion moved from the developer community to regulated enterprises. Rather than pitching generic automation, they targeted concrete use cases where accuracy, privacy and traceability matter, such as document analysis, code assistance and multilingual processing. Big banks and corporates began hosting Mistral models internally to avoid data leakage and regulatory risk. The recent multi-year deal with HSBC shows how that strategy pays off: enterprises will pay to self-host AI that meets governance rules.

Hiring, culture and near misses

Early hires were research-first but product-minded. The founding team insisted on small cross-functional squads that could ship and instrument models rapidly. That culture shortened learning cycles, but it also meant painful trade-offs: some early model releases had to be pulled back or re-tuned when safety issues surfaced. The turning point was investing in real-world evaluation pipelines and enterprise-grade deployment tooling.

Positioning for the long game

Mistral is betting on being the European backbone for generative AI, focusing on sovereign deployments and partnerships with infrastructure players. If they keep delivering models that are efficient, auditable and easy to deploy, they can capture a slice of enterprise spend that values trust and cost efficiency over novelty.

Close

For founders, the Mistral story is a compact playbook: pick a practical product dimension, win developers with openness, then convert trust into enterprise contracts. Watch how they balance open research with commercial rigour. That balance may define who builds the next generation of AI infrastructure, and how fast incumbents adapt.