In January 2025, a little-known Chinese startup released an AI model that changed the conversation around artificial intelligence overnight. The model, called DeepSeek-R1, reportedly cost only about $294,000 to train. Within days, it was one of the most downloaded models in the world and had triggered serious questions about why AI development had become so expensive.

DeepSeek-R1 was not just another chatbot. It was a reasoning model, built to solve problems step by step instead of guessing answers quickly. More importantly, it delivered this capability at a fraction of the cost of comparable models from large US companies. For founders and investors, it sent a clear message: high-quality AI no longer needs billion-dollar budgets.

How DeepSeek-R1 came to life

DeepSeek’s roots are unusual. It did not begin as a startup chasing hype. It grew out of a Chinese hedge fund called High-Flyer, where founder Liang Wenfeng had been using AI models to guide trading decisions. By 2021, most of the fund’s trading was already powered by AI.

When US export restrictions limited China’s access to advanced chips, Liang and his team faced a hard constraint. They could not rely on the best hardware. Instead of scaling up, they decided to scale smarter. Their question was simple: how good can an AI model become if you redesign everything around efficiency?

DeepSeek was officially launched in 2023 with a small, focused team. Early models attracted attention mainly within China. The global turning point came with DeepSeek-R1 in early 2025.

Two decisions defined the breakthrough.

First, the model was designed to “think” before answering. It learned to reason through reinforcement learning: it was rewarded for reaching correct, checkable answers, and writing out intermediate steps emerged as the way to get there. This made it better at complex tasks like planning and analysis.

Second, the team used a mixture-of-experts architecture, in which only a fraction of the model’s parameters activate for each input. This reduced computing costs drastically while maintaining strong performance.
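The idea of activating only part of a model is commonly implemented as mixture-of-experts routing: a small router scores every expert, and only the top few are actually run. The sketch below is a toy illustration under that assumption, not DeepSeek's code. The four scalar "experts", the router scores and the function names are all hypothetical.

```python
import math

def softmax(scores):
    """Turn raw router scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalise so the kept weights sum to 1
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, router_scores, k=2):
    """Blend the outputs of the k selected experts; the others are never called."""
    routes = route_top_k(router_scores, k)
    output = sum(weight * experts[i](x) for i, weight in routes)
    return output, [i for i, _ in routes]

# Hypothetical toy experts: each one just multiplies its input by a constant.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]

output, used = moe_forward(10.0, experts, router_scores=[0.1, 2.0, 0.3, 1.5], k=2)
print(used)  # [1, 3]: only two of the four experts did any work
```

With four experts and `k=2`, half of the expert computation is skipped for this input. Production models apply the same principle with far more experts and a learned router.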

Then came the boldest move. DeepSeek released the model’s weights under a permissive open licence and priced its API far below competitors’ rates. Suddenly, developers everywhere could access a reasoning-grade AI without paying premium prices.

The reaction was massive. Downloads surged. Developers began adapting the model for new use cases. Investors started questioning whether current AI infrastructure costs were justified.

What we should learn from DeepSeek-R1

How the model works, simply explained

Most AI language models start answering immediately, producing a reply one word at a time. DeepSeek-R1 spends extra computation first: it writes out intermediate reasoning steps before committing to a final answer, and it activates only the parts of the network needed for each input. This makes it well-suited for AI agents that plan, decide and act across workflows.
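In practice, R1-style models expose their step-by-step thinking as text placed before the final answer, conventionally wrapped in `<think>` tags. The snippet below is a minimal sketch that assumes that tag convention and uses a made-up sample response. The helper name `split_reasoning` is hypothetical.

```python
import re

def split_reasoning(response: str):
    """Separate the model's visible reasoning from its final answer.

    Assumes the reasoning is wrapped in <think>...</think> and the answer
    follows the closing tag. Returns (reasoning, answer).
    """
    match = re.search(r"<think>(.*?)</think>", response, flags=re.DOTALL)
    if match is None:
        # No reasoning block found: treat the whole response as the answer.
        return "", response.strip()
    reasoning = match.group(1).strip()
    answer = response[match.end():].strip()
    return reasoning, answer

# Made-up response text, for illustration only.
sample = "<think>17 * 3 = 51, and 51 + 4 = 55.</think>\nThe result is 55."

reasoning, answer = split_reasoning(sample)
print(reasoning)  # 17 * 3 = 51, and 51 + 4 = 55.
print(answer)     # The result is 55.
```

An agent pipeline would typically log or discard the reasoning text and pass only the answer to the next step.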

Where it is being used

Because the model is open and affordable, adoption has spread quickly:

Startups are fine-tuning it for specific industries like finance, law and manufacturing.

Smaller versions of the model can run on limited hardware, even on local servers.

Governments and enterprises are studying its cost structure while planning their own AI programmes, including national ones.

Business opportunities it enables

Lower AI costs change what is possible:

Products that were too expensive to run, like always-on AI assistants, now make financial sense.

Regulated industries can deploy AI on their own servers instead of using external cloud providers.

AI agent platforms become more viable when the “thinking cost” is low.
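A back-of-the-envelope calculation shows why per-token price decides whether an always-on product is viable. The workload and both prices below are hypothetical placeholders chosen for easy arithmetic, not quotes from any provider.

```python
def monthly_cost_usd(requests_per_day, tokens_per_request,
                     usd_per_million_tokens, days=30):
    """Estimate the monthly inference bill for a steady workload."""
    total_tokens = requests_per_day * tokens_per_request * days
    return total_tokens / 1_000_000 * usd_per_million_tokens

# Hypothetical assistant: 2,000 requests a day, 3,000 tokens each
# (reasoning tokens included).
workload = dict(requests_per_day=2_000, tokens_per_request=3_000)

# Placeholder prices per million tokens, for illustration only.
premium = monthly_cost_usd(**workload, usd_per_million_tokens=60.0)
budget = monthly_cost_usd(**workload, usd_per_million_tokens=2.0)

print(f"premium: ${premium:,.0f}/month, budget: ${budget:,.0f}/month")
# premium: $10,800/month, budget: $360/month
```

The same workload costs thirty times less at the lower placeholder price, which is the kind of gap that can turn a loss-making feature into a sustainable one.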

Competitive and regulatory realities

DeepSeek-R1 has increased pressure on closed AI providers to justify high prices. At the same time, some companies and governments remain cautious about adopting Chinese-origin models, especially in sensitive sectors. Founders will need to factor in trust, compliance and customer perception.

Challenges to keep in mind

Using open models still requires technical expertise. Safety testing, monitoring and deployment are not trivial. Policies also vary by geography.

Why this matters

DeepSeek-R1 proves that advanced AI is no longer limited to a few deep-pocketed players. Reasoning models can be efficient, open and affordable. This shifts the competitive advantage away from raw model size toward application design, domain knowledge and distribution.

For founders, the lesson is clear. Treat powerful reasoning models as a baseline. Focus on what you build on top of them, how they fit into real workflows and how they create lasting value. The future of AI will be shaped less by who builds the biggest model and more by who uses intelligence wisely.

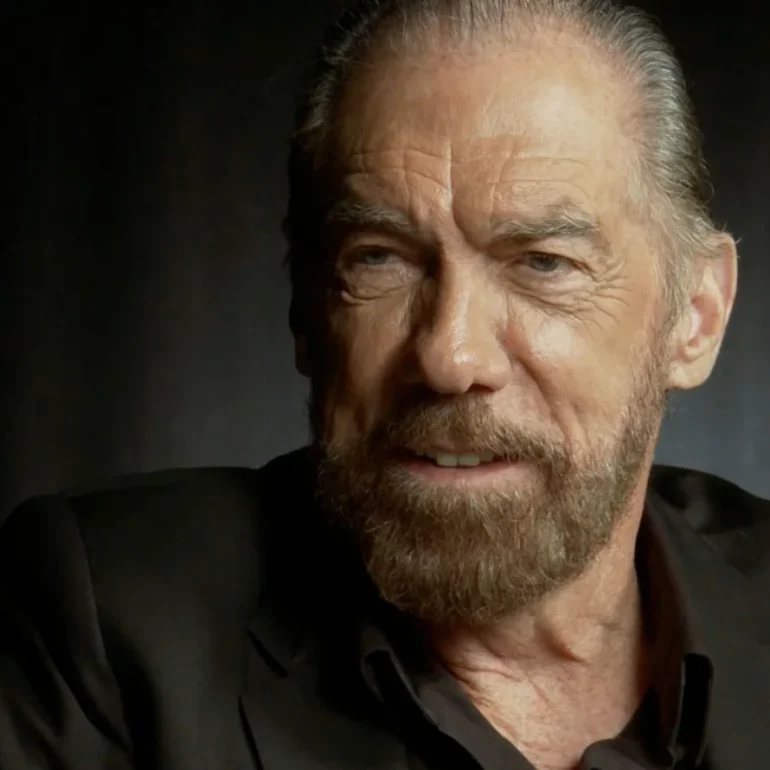

Liang Wenfeng | Founder & CEO, DeepSeek

Born in 1985 in Wuchuan, Guangdong, Liang Wenfeng is based in Hangzhou. Liang leads DeepSeek as Founder and CEO, steering it as a research-first AI lab. He also co-founded High-Flyer, one of China’s most powerful AI-native quantitative hedge funds.

His estimated net worth is around US$11 billion as of 2025, largely from High-Flyer and his majority stake in DeepSeek.

During the 2008 financial crisis, Liang began experimenting with machine learning, which led to High-Flyer in 2016. Profits from quant trading later funded long-term investments in GPUs and AI infrastructure, setting the stage for DeepSeek in 2023.

Liang is intensely low-profile and research-led. He believes China’s real AI edge will come from original thinking, not copying what already exists.